Big data is increasingly being integrated into systems that must process vast amounts of information from geographically distributed data sources while fulfilling the non-functional properties inherited from the domain in which the analytics are applied, such as smart cities or smart manufacturing.

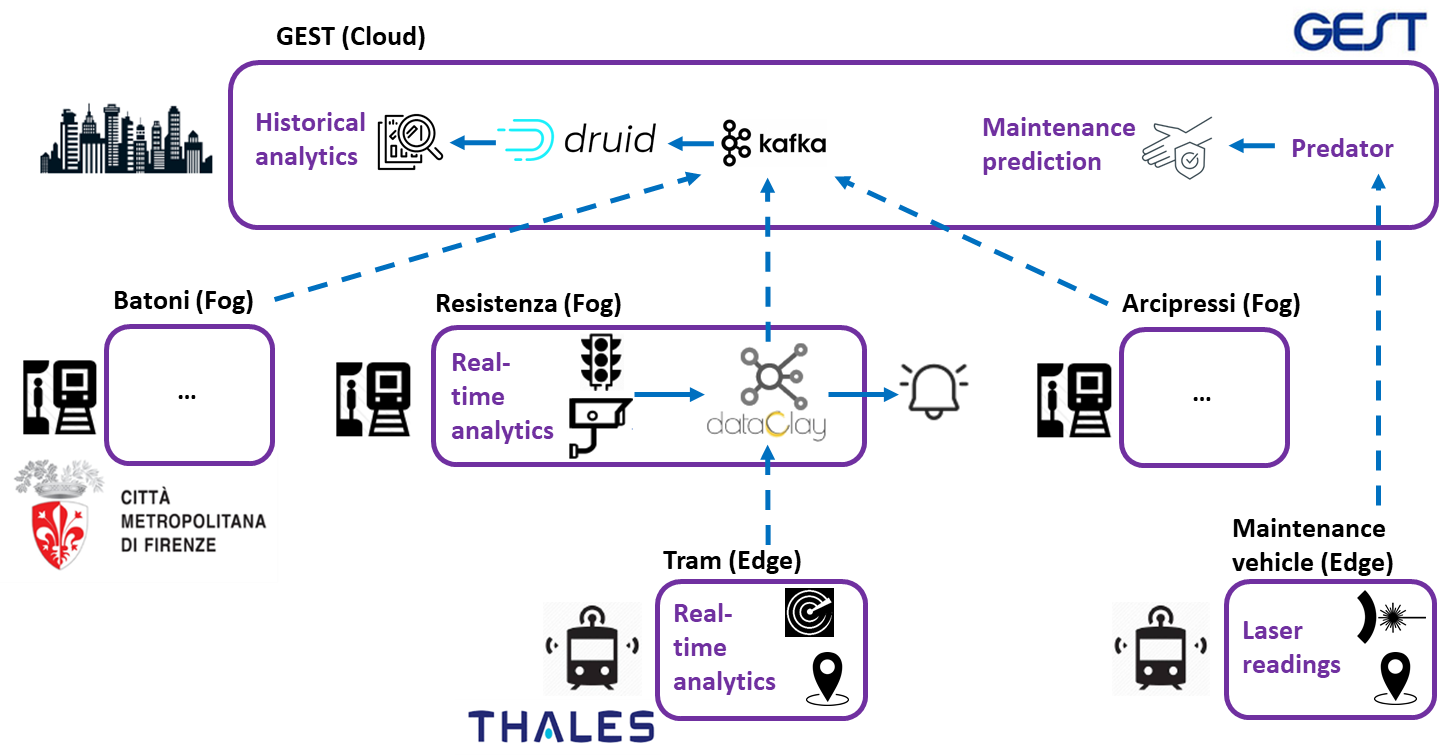

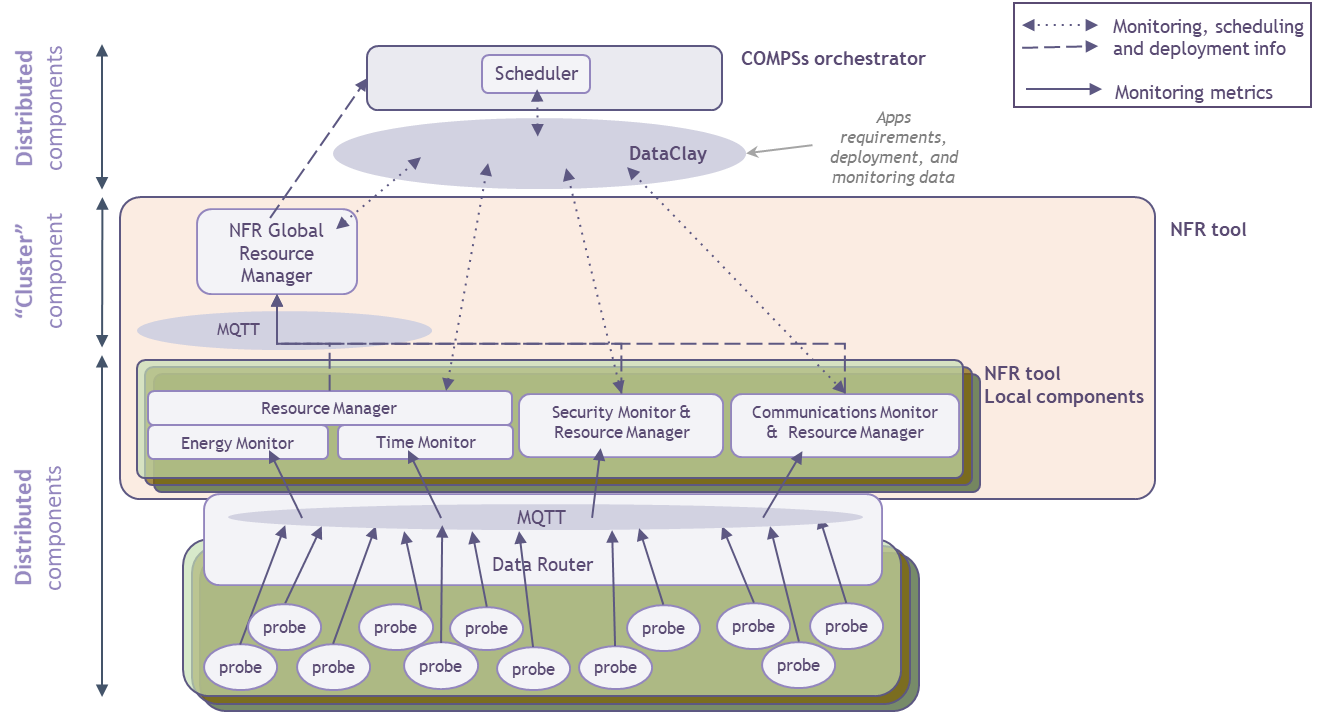

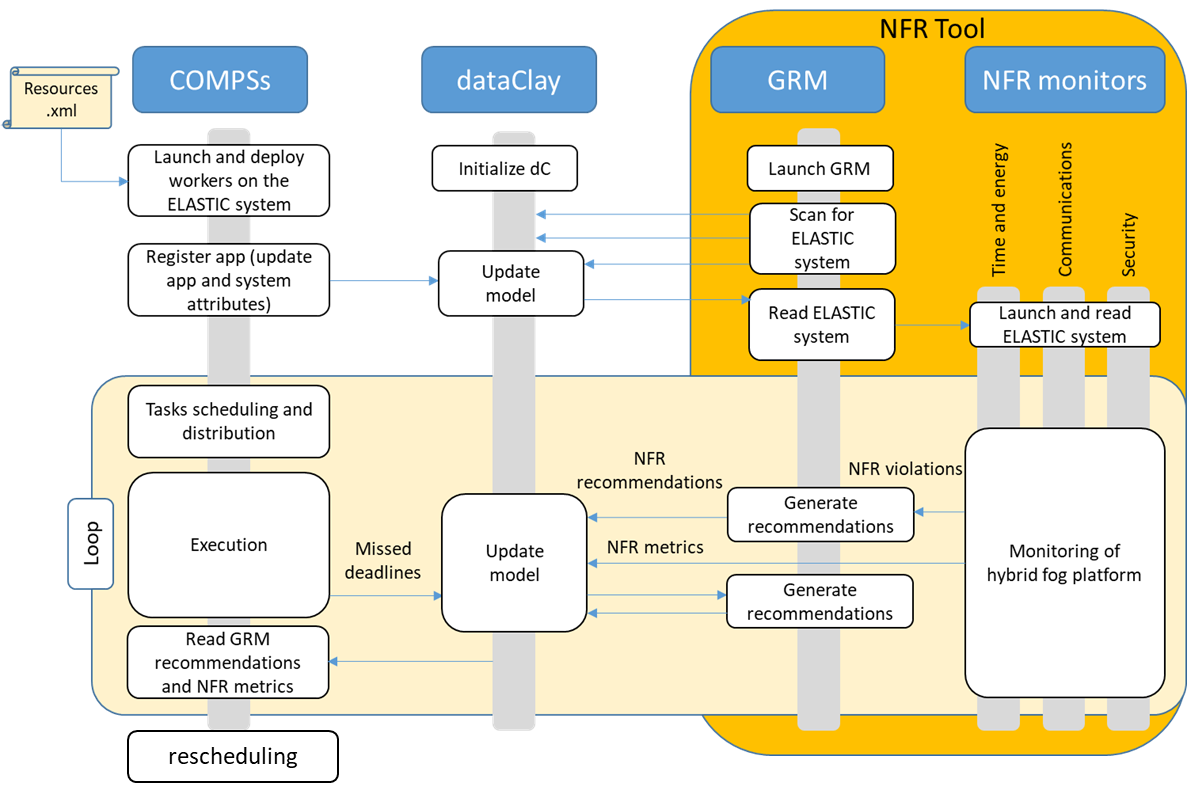

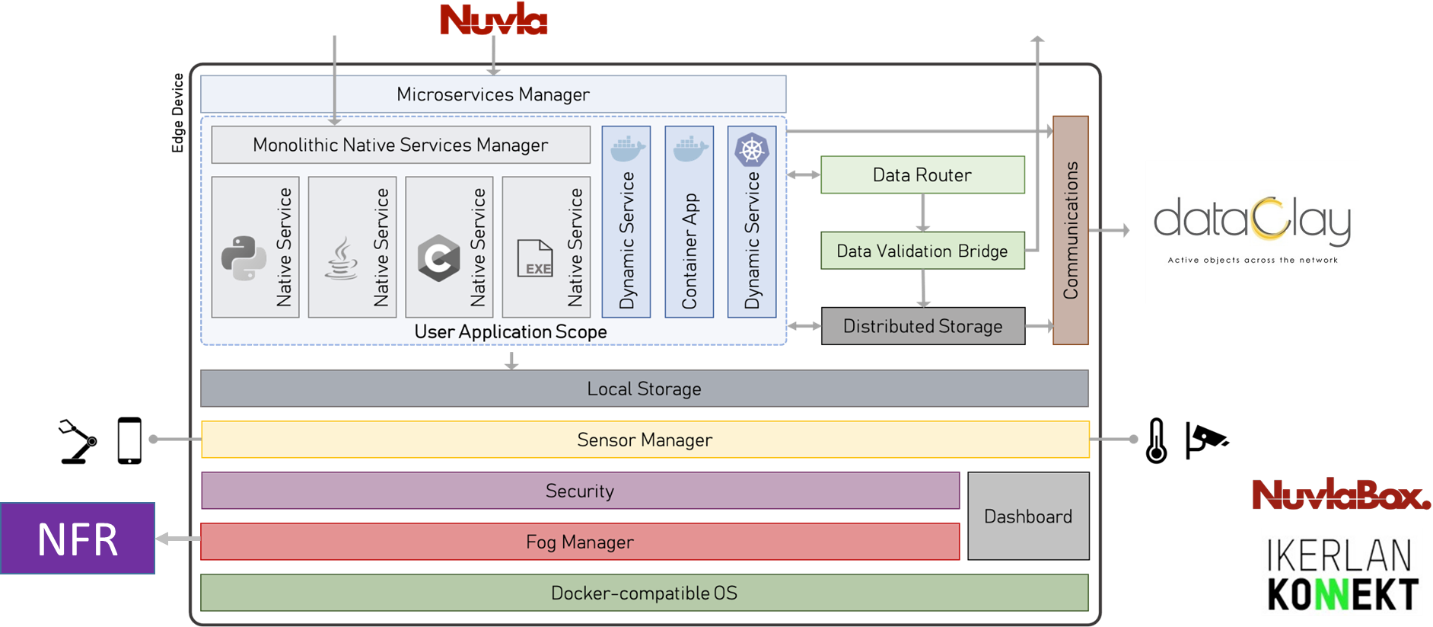

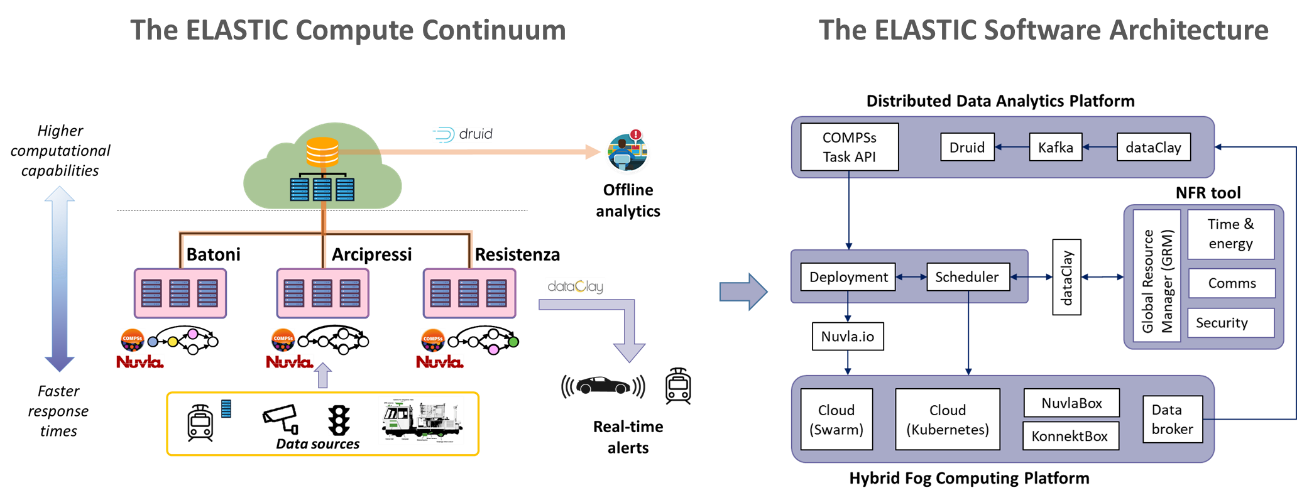

ELASTIC has designed a novel software architecture (SA) that addresses the challenge of efficiently distributing extreme-scale big-data analytics across the compute continuum, from edge to cloud, while providing guarantees on the non-functional requirements of real-time, energy, communications and security stemming from the smart mobility domain.

To that end, the ELASTIC software ecosystem incorporates different components from multiple information and communications technologies (ICTs), including distributed data analytics, embedded computing, internet of things (IoT), cyber-physical systems (CPS), software engineering, high-performance computing (HPC), and edge and cloud technologies.

The integration of all these components into a single development framework enables the design, implementation and efficient execution of extreme-scale big-data analytics. To achieve this goal, ELASTIC has incorporated a new elasticity concept across the compute continuum. Its objective is to provide the level of performance needed to process the envisioned volume and velocity of data from geographically dispersed sources at an affordable development cost, whilst guaranteeing the fulfilment of the non-functional properties inherited from the system domain.

The ELASTIC SA, validated in three smart mobility use cases in the city of Florence, has achieved:

- Integration and optimization of advanced data analytics methods into a complex workflow for both real-time and offline analytics, executed across the edge/cloud continuum and collecting extreme-scale data from multiple sources in both the tramway network and the city infrastructure.

- Up to 50% reduction in software development costs, bringing down the development time for the smart city use case from 2 months to 2 weeks.

- Up to 38% reduction of the analytics response time through advanced scheduling for distributed execution, taking into account data dependencies, the quality of communication links and real-time requirements.
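To make the scheduling idea in the last point more concrete, the following is a minimal sketch of a greedy earliest-finish-time placement that considers data dependencies, link bandwidth and a deadline. It is not the ELASTIC scheduler: the names `Task`, `Node`, `schedule` and `bandwidth_mb_s`, as well as all numbers, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter  # Python 3.9+


@dataclass
class Task:
    name: str
    work: float              # abstract work units (hypothetical)
    deps: tuple = ()         # names of tasks whose output this task consumes
    output_mb: float = 0.0   # size of the data this task produces


@dataclass
class Node:
    name: str
    speed: float             # work units processed per second (hypothetical)


def schedule(tasks, nodes, bandwidth_mb_s, deadline):
    """Place each task on the node where it would finish earliest.

    bandwidth_mb_s maps (source node, destination node) to MB/s and stands
    in for the quality of the communication link between two nodes.
    Returns (placement, makespan, deadline_met).
    """
    by_name = {t.name: t for t in tasks}
    # Visit tasks so that every dependency is placed before its consumers.
    order = TopologicalSorter({t.name: set(t.deps) for t in tasks}).static_order()
    free_at = {n.name: 0.0 for n in nodes}  # time at which each node is idle
    placement, finish = {}, {}

    for name in order:
        task = by_name[name]
        best_end, best_node = None, None
        for node in nodes:
            # Inputs are ready once every dependency has finished and its
            # output has crossed the link (no transfer if on the same node).
            ready = 0.0
            for dep in task.deps:
                src = placement[dep]
                transfer = 0.0
                if src != node.name:
                    transfer = by_name[dep].output_mb / bandwidth_mb_s[(src, node.name)]
                ready = max(ready, finish[dep] + transfer)
            end = max(ready, free_at[node.name]) + task.work / node.speed
            if best_end is None or end < best_end:
                best_end, best_node = end, node
        placement[name] = best_node.name
        finish[name] = best_end
        free_at[best_node.name] = best_end

    makespan = max(finish.values())
    return placement, makespan, makespan <= deadline


# Hypothetical example: two independent tasks feeding a third one.
tasks = [
    Task("a", work=4, output_mb=10),
    Task("b", work=4, output_mb=10),
    Task("c", work=2, deps=("a", "b")),
]
nodes = [Node("n1", speed=2), Node("n2", speed=2)]
links = {("n1", "n2"): 10.0, ("n2", "n1"): 10.0}

placement, makespan, on_time = schedule(tasks, nodes, links, deadline=5.0)
print(placement, makespan, on_time)
```

In this sketch the two independent tasks end up on different nodes, and the dependent task has to wait for one input to cross the link. A production scheduler would additionally need to handle changing link quality, energy and security constraints, which this example leaves out.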

The figure below shows a schematic view of the compute continuum considered in the ELASTIC use cases and an overview of the ELASTIC software architecture stack, showing the key software components.

Overall, the ELASTIC SA consists of four layers, each tackled by a dedicated Work Package (WP) of the project, further described below. All software components have been added to the dedicated ELASTIC GitLab repository.